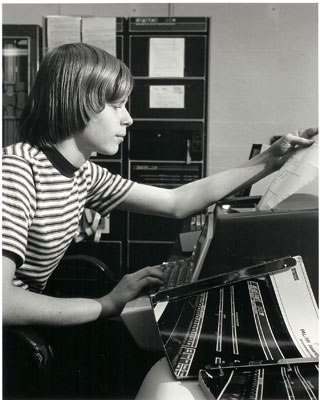

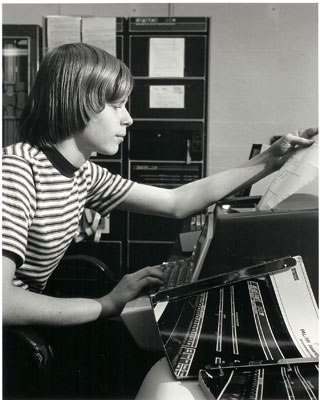

I just stumbled upon a picture of Jonathan Edwards of subtext fame. Here it is, for your convenience:

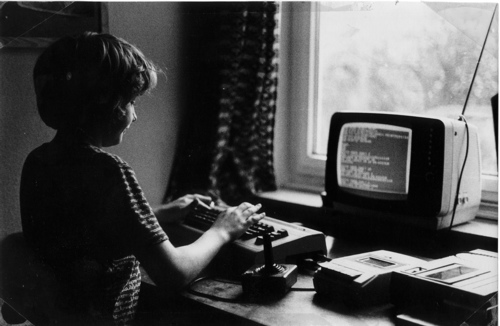

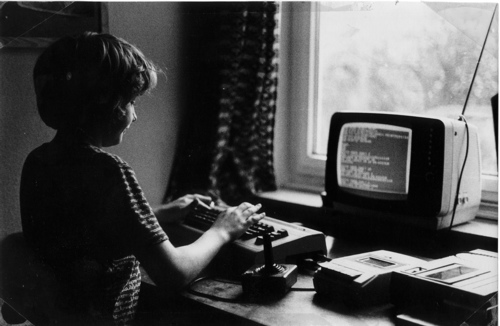

And it somehow reminded me… well, you tell me.

When I first heard Ken Schwaber’s talk I thought wow, exactly, he’s right, we don’t have a chance, the end of the world is nigh.

When you have some central piece of software that many other projects rely upon, that central track is often pressed into delivering features earlier than is good for the software. As a result, you get software that

Ken Schwaber calls this “Design Dead” Software. Implying: throw away, don’t reuse any more, open source or sell.

…but couldn’t you say the same about any piece of code? Some simple facts: Overstaffing a project is not an economical idea. The power to change things in unforeseen ways implies the power to break things – the difference being made by the developer’s understanding of the consequences. The ability to understand code depends not just on the code, but on the newcomer’s background knowledge. What remains “magic” depends on your willingness to understand, which to a large degree is a social and personal thing. Something that is easy to use the way it was designed, or the way it is implicitly used by its fans, may be very hard to use in a different way or for a different purpose. A test harness appears especially bad if you don’t understand what it tests, or if it does not test the cases you consider relevant.

Which, to me, means that a “central” piece of software essentially poses a knowledge management problem. Personnel turnover is inevitable in the long run (be it because of boredom or because of high pressure at the “center”), so there will be new people with fresh views coming in contact with the code – which, if not organized very carefully, causes the kind of friction described above. Also, stability is of high concern at the center, so it may be difficult to take new or current architectural ideas into account (there are limits to what refactoring can do). Which, again, might make it less attractive to work on (someone else’s baby). Old software is most probably “Hype Dead Software”.

Providing and maintaining a framework, platform, infrastructure appeals to software people because abstraction is their daily bread. But don’t fool yourself: it requires a long-term commitment, and in the end may be more about duty and reliability than about fun. And it’s an open question whether the problem you have abstracted and solved will actually be relevant, and will be considered relevant and valuable, long-term.

Programming is all about confronting the unknown.

FDD – Fear Driven Development

AOP – Angst Oriented Programming

DSL – Dread Specific Language

MDA – Mental Disorder Architecture

RAD – Reckless Application Development

XP – Jesus Christ!

E2.0 – Escapism 2.0

Thanks for the inspiring discussion, mabuse.

If you start by planning to add lots of features, add them in ways that introduce weaknesses and restrictions, then refuse to remove or replace any of your additions as a way of repairing the damage, you end up with a mess that piles workaround on top of workaround and calls them features.

Scheme, like Java, (like me?) is growing up and losing focus.

“The biggest advantage I can see is […] that the Java language can be extended along certain lines without waiting for the fairly long release cycle to approve and produce something new. It should also take some pressure off the compiler writers, in that some features which previously would require a language change can, in some fashion, be accomplished with closures.”

…so keyword arguments and map constructor syntax will be next?

Wow, if I had known my previous post would be read with such scrutiny, I might have left it to ripen a bit longer. I’m really happy to have helped ignite such a balanced and informed discussion!

Originally I intended to post the following as a comment on Reginald’s blog, but I was unable to keep my prose down to a reasonable size… blogs are not the perfect medium for a discussion, but here we go.

The point I tried to make about type checking is that the type checker has no meaningful way to report typing problems if you don’t give it the names, but just a knot of function types. Similarly, if everything is just a list (as in ideal Lisp), the runtime errors can speak of nothing but cons cells.

Reginald, I’m glad you think that the importance of naming things is “obvious” – some programmers appear to think otherwise. Someone might find the task of making up good names unnecessary, or challenging, so they skip it where possible (especially when working in haste, and especially those with a maths/physics background who have learned to deal with pure structure). I consider this bad practice for software, and therefore would not want a programming language to actively encourage conceptual anonymity.

I probably mistook the point of your original post because you set out to answer the question “why should I care about closures, as Java programmer”, but described closures from a purely functional perspective. I believe Java programmers need to care about closures not because the functional paradigm is great, but because of how closures enrich OO programming.

Haskell and ML are much better at domain modelling than Scheme, because of their type system (which is notably absent in Ruby). We can assume “functional-style” programming in Ruby resembles Scheme more than ML, so that’s what I was referring to, given that your examples were using Ruby.

Closures in Smalltalk were originally not full static closures – they were used mostly for control structures and iteration. The full power of closures (as in Scheme etc.) was only added in a later iteration. Which seems to indicate to me that their full power was not required for the “prime use cases” of OO programming – you could still refactor to class-based objects when you needed more.

The Undo/Redo stack is a perfect example of something that should NOT be done using closures, for three reasons. First, each command is not one but two closures (one for Redo, one for Undo), so which of them carries the object identity? Second, a command may have a name you want to present to the user (“Undo move” vs. “Undo delete”). Third, when your software is supposed to persist a command log, or merge commands executed by different users on the same document, you will need a much deeper analysis of the commands than just executing them. Of course you can try to refactor at some point, but searching for all usages and implementations of “Closure.apply” will not produce very meaningful results.
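To make this concrete, here is a minimal sketch of a command as a named object rather than a pair of closures. All names (`Command`, `AppendWord`, `History`, `UndoDemo`) are made up for illustration and are not taken from any real framework; the "document" is just a list of words.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical command interface: one object carries the name and both
// directions, instead of two anonymous closures with no shared identity.
interface Command {
    String name();
    void execute();
    void undo();
}

// One concrete command that other code can inspect: append a word.
final class AppendWord implements Command {
    private final List<String> document;
    private final String word;

    AppendWord(List<String> document, String word) {
        this.document = document;
        this.word = word;
    }

    public String name() { return "append \"" + word + "\""; }
    public void execute() { document.add(word); }
    public void undo() { document.remove(document.size() - 1); }
}

// Undo/Redo stacks that hold commands, not bare closures.
final class History {
    private final Deque<Command> done = new ArrayDeque<>();
    private final Deque<Command> undone = new ArrayDeque<>();

    void run(Command c) {
        c.execute();
        done.push(c);
        undone.clear(); // a new action invalidates the redo stack
    }

    // Label for the menu entry, or null if there is nothing to undo.
    String undoLabel() {
        return done.isEmpty() ? null : "Undo " + done.peek().name();
    }

    void undo() {
        if (done.isEmpty()) return;
        Command c = done.pop();
        c.undo();
        undone.push(c);
    }

    void redo() {
        if (undone.isEmpty()) return;
        Command c = undone.pop();
        c.execute();
        done.push(c);
    }
}

class UndoDemo {
    public static void main(String[] args) {
        List<String> doc = new ArrayList<>();
        History history = new History();
        history.run(new AppendWord(doc, "hello"));
        history.run(new AppendWord(doc, "world"));
        System.out.println(history.undoLabel());
        history.undo();
        System.out.println(doc);
        history.redo();
        System.out.println(doc);
    }
}
```

Because `AppendWord` is an ordinary class, a search for its usages finds exactly the places that create this kind of command, and adding a field for persistence or merging is a local change.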

Transactions are another example of more than just a closure – they’ll have an isolation level, a name, an identifier,… Obtaining these property values certainly requires a broader interface than closure.apply(), or another object to wrap the closure, again splitting up object identity. What transactions do have is a Single Abstract Method – did I mention I like the CICE proposal?

So I actually would like a language that is “opinionated” in the sense that it gives guidance. Would you really want to program in an opinion-less language, if such a thing can be imagined at all? How would two pieces of code in that language ever fit together? In the Tycoon libraries, each data structure was implemented three times, once functional, once imperative and once OO. Seems wasteful, doesn’t it? All language designs I like express the opinion that “goto” should be rarely used. Don’t you think? In a similar vein, I would like a language to express that most things ought to be named and related to domain concepts.

The Java blogosphere is currently discovering or introducing to the uninitiated the benefits of closures. To me, posts like Reginald’s seem to present closures as an advance from OO. This is kind of strange, given that the historical order is exactly the other way round!

When I started digging into OO languages in the 90s, I had already spent some time with Scheme and Tycoon (a language based on Luca Cardelli‘s Quest), all of them higher-order functional-imperative programming languages. I did some non-trivial compiler programming in both languages, and it certainly was fun, in a way similar to solving math riddles.

I learned that OO was great because it names things. OO allows a traceable connection between the conceptual design level and the implementation level. Concepts have names, so you can talk about them, between programmers and architects. The promise of OO was to replace the dazzling complexity (and intellectual challenge) of functional programming with something more manageable.

Language is not understood best when you have removed as much as possible. Would you rather read a novel, or an algebra textbook? Just imagine taking your green, yellow and red pen and marking up a book by Neal Stephenson.

Conciseness comes at a price: The more compressed your code, the harder it is to understand what the consequence of some change would be. If you have left out everything you do not need for your current goal, what if the goal moves? If (e.g.) you find that what was once a closure should now be a class, e.g. because some code wants to inspect it instead of just running it, you’ll be crying for another super-advanced refactoring IDE.

“Everyone knows that debugging is twice as hard as writing a program in the first place. So if you’re as clever as you can be when you write it, how will you ever debug it?” (Kernighan)

Higher-higher-order functions and inferred types, or lists of lists of pairs whose cdr happens to represent some concept (except when it represents another concept) will all give you that occasional “ouch, there’s an error message, but I don’t think there should be!” The code looks fine, so why does the type checker not like it? (It probably took something you wrote, took it all wrong, drew impressive conclusions and is now reporting a problem at a completely different place.) The program state and the code both look fine, but they differ in the level of nesting of some unnamed construct (e.g. a closure or a list where a value was expected).

I strongly associate higher-order functional programming with long stretches of uninterrupted concentrated programming work, and single-person projects. In contrast, OO gives you a pragmatic, shallow paradigm for making software intelligible and available for cooperation.

Closures can be a nice tool for creating your own DSL, to make your code get closer to the design model. But closures can also take you further away from the design model, by creating constructs that only make sense in the abstract.

Object-oriented programming was introduced to fill a need not satisfied by functional and imperative languages. Remember: Going from functional to OO is an advance.

“In theory, practice is just like the theory, but in practice, practice is nothing like the theory. Or so the theory goes.”

“If it quacks like a dove, walks like a bear, and looks like a postbox, it’s not a duck, even if theoretically it still fits the definition of one, for all practical intents and purposes.”

Another extremely funny rant by reinier on the Raganwald blog…

A few months ago I tried to implement some refactorings for the Subtext “programming language”. I think I now understand some reasons why that did not work out.

A refactoring changes the shape of your program, while maintaining the program’s behaviour. You do it so the code gets into a shape that makes the changes you are aiming for easier to apply, and makes it easier to reason about their correctness. The correctness of the initial refactoring is guaranteed by your IDE.

Note that even a refactoring is a change; it is only guaranteed to be “correct” as long as you live in a closed world, where all the code you might interact with is contained in your IDE project. As soon as you leave that safe haven, using type casts, reflection, dynamic linking, or when you guarantee backwards compatibility for published interfaces, that’s over.
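As a small illustration of leaving the closed world, consider a reflective caller after a "rename method" refactoring. This is only a sketch: `Rectangle`, `ReflectiveClient` and `callByName` are hypothetical names, and the scenario assumes the method was called `area()` before the IDE renamed it to `surface()`.

```java
import java.lang.reflect.Method;

// Hypothetical class after an IDE "rename method" refactoring:
// area() became surface(), and all typed call sites were updated.
class Rectangle {
    private final int width;
    private final int height;

    Rectangle(int width, int height) {
        this.width = width;
        this.height = height;
    }

    public int surface() { return width * height; }
}

class ReflectiveClient {
    // A caller outside the IDE's view: the method name is just a string,
    // so the rename refactoring had no way to find and update it.
    // Returns null if the lookup or the invocation fails.
    static Integer callByName(Object target, String methodName) {
        try {
            Method m = target.getClass().getMethod(methodName);
            return (Integer) m.invoke(target);
        } catch (ReflectiveOperationException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        Rectangle r = new Rectangle(2, 3);
        // Typed call site: kept correct by the refactoring.
        System.out.println(r.surface());
        // Reflective call with the new name: works only if someone edits the string by hand.
        System.out.println(callByName(r, "surface"));
        // Reflective call with the stale name: compiles, but fails at run time.
        System.out.println(callByName(r, "area"));
    }
}
```

The compiler and the IDE are equally happy with both strings; only running the code reveals that the second lookup no longer finds anything.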

So, what about Subtext?

Firstly, Subtext does not have an external “behaviour” that might be observed. It just has a system state, and it’s up to the user to observe any interesting bits of that system state at any time, and even in retrospect. The system state encompasses code, data, past states and an operation history. It’s all the same, actually, just nested nodes.

This makes it somewhat difficult to separate a program’s behaviour from the program itself. The solution I tried for transferring the refactoring idea is to guarantee some property of some usage of a node before and after a refactoring: “When invoked as a function with the same arguments as before the refactoring, the result subtree will look the same” – seems quite straightforward. I made a whole catalog of these.

However, here comes “Secondly”: Subtext is completely untyped – at a sufficient level of abstraction, function invocation is the same as copying with changes, and is also the same as assignment, method overriding, and object creation, and it is exactly at this level that Subtext operates. Also, all the innards of a function are accessible (and overridable), not just its result. So essentially the only way to know what a “function” does to its arguments is to run the function. In particular, due to untyped higher-order data flow, an automated analysis can’t really tell where a node modified by the refactoring may eventually be copied to. But without this information, the refactoring cannot guarantee much about the system state. (I tried some heuristics, which turned out either too coarse or incomprehensible to a user.)

Static types and other distinctions made by the programming language form the contract between you and your tools. As long as you stick to the types, your tools can and will help you. If you don’t have a type system, or the type system is too clever, you do not notice when you cross the line, and your tools become unreliable.

If a project is small enough and short-lived enough, and the language concise enough, you may not need tools as desperately. Some people also find tools like Eclipse or IntelliJ IDEA too complex or heavy-weight and prefer to stick to their ASCII editor, and therefore focus on the “conciseness” aspect, or restrict the scope of their project, or rely on tests. That’s OK as long as you realize that by liberating yourself from types, you are also limiting yourself in various ways.

“Academic discussions won’t get you a million new users. You need faith-based arguments. People have to watch you having fun, and envy you.”

Steve Yegge points out that culture matters, and may make Ruby the Next Big Thing.

When asked why we are using Java at work, I said “because we have to do things the hard way” – it’s the way that’s guaranteed to work, rock-solid, interoperable, stable, enough skilled engineers out there in the market place, enough googleable solutions for our everyday problems, will be actively maintained for years to come, Not My Fault if it does not run, etc.

If Ruby ever gets to that point, great. But until that day, everyone in middle management does their best to ignore the fun factor, and engineers will keep dreaming (of using Ruby, of owning an Apple Mac, of a world without deadlines, legacy and momentum).

The hard way means: having to wait until the Next Big Thing is the Current Big Thing.